1 Introduction

This document describes the decisions made in the course of implementing the Data Backbone design from DMTN-122.

2 Replication and Transport

The Data Backbone (DBB) will be used to move file/object data for production purposes between Data Facilities, including the US Data Facility (USDF), French Data Facility (FrDF), and UK Data Facility (UKDF). It will also be used to move “offline”, non-time-sensitive raw data from the Observatory in Chile to the USDF and potentially to the FrDF. Data products to be served directly from the Chilean Data Access Center or from Independent Data Access Centers (IDACs) will be moved via the DBB, as will data products sent to other partners, as described in DMTN-147.

These large-scale movements will be coordinated via Rucio.

Policies will be used to direct transfers from appropriate sources to appropriate destinations. For example, the UKDF may obtain its data from the FrDF rather than from the USDF or the Observatory. The UKDF will not receive all raw images; instead, it will receive only images from a pre-designated portion of the sky. Appropriate metadata labels for the raw image files, along with policies that use those labels, will be configured in Rucio to restrict these transfers accordingly.
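The effect of such a policy can be sketched as a plain function that maps one raw file's metadata to (destination, source) pairs. This is not Rucio's rule syntax; in Rucio the equivalent would be expressed as replication rules and RSE expressions. The `sky_region` label, the region names assigned to the UKDF, and the choice of the USDF as the FrDF's source are hypothetical placeholders for illustration.

```python
from dataclasses import dataclass

# Hypothetical sky regions pre-designated for the UKDF (placeholder values).
UKDF_REGIONS = frozenset({"region-07", "region-08"})


@dataclass(frozen=True)
class RawFile:
    name: str
    sky_region: str  # hypothetical metadata label attached to each raw file


def plan_transfers(f: RawFile) -> list[tuple[str, str]]:
    """Return (destination, source) pairs for one raw file under the example policy."""
    # Every raw file goes from the Observatory to the USDF. The FrDF copying
    # from the USDF is an assumption made only for this sketch.
    plan = [("USDF", "Observatory"), ("FrDF", "USDF")]
    # The UKDF receives only raw files from its pre-designated part of the sky,
    # and obtains them from the FrDF rather than from the USDF or the Observatory.
    if f.sky_region in UKDF_REGIONS:
        plan.append(("UKDF", "FrDF"))
    return plan
```

For a file labeled `region-07`, the plan includes `("UKDF", "FrDF")`; for any region outside `UKDF_REGIONS`, the UKDF does not appear as a destination.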

Deciding that intermediate data products are no longer needed will be handled by workflow execution or campaign management mechanisms. Rucio will be used to execute this removal across all replicas.

Download of files to end users will be provided by Virtual Observatory and/or other services within the Rubin Science Platform; it is not expected to be handled by Rucio.

Tiering of files to nearline or archival storage is handled by storage infrastructure, not Rucio.

Similarly, in a hybrid cloud model where permanent storage is on-premises but a cache is maintained in the cloud to improve latency for users, cache maintenance is the responsibility of a dedicated caching service, not Rucio.

3 Location and Metadata

3.1 Overview

The USDF will serve as the central Rucio site. Rucio will maintain the global state of replication of files/objects across sites in a single central database. Rucio services will be used to transfer files to Rucio Storage Elements (RSEs) at each site. Note that the sites with RSEs will include not only the Data Facilities for Data Release Production but also the Chilean Data Access Center, and may include Independent Data Access Centers (IDACs) that require copies of data products. Rules set in Rucio determine how files are transferred between RSEs.

Each site has storage (file systems, object stores, tape, etc.) registered with Rucio as one or more RSEs, and each site (except possibly IDACs) will have its own local Butler registry, which maintains a view of all local datasets. There are RSEs at the USDF, in Chile, at the UKDF, and at the FrDF. No Rucio services run at the remote sites, so all Rucio commands must call back to the USDF to perform actions.

After an action in Rucio completes, it is recorded in the Rucio database at the USDF. A Rucio daemon, Hermes, periodically reads new entries from this database, converts each into a message, and sends the message to a message broker. Monitoring services generally consume this message stream.
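One polling pass of a Hermes-like daemon can be sketched with an in-memory SQLite table standing in for the Rucio database. The table layout, the `limit` parameter, and the delete-after-send step are simplifications assumed for this sketch, not Hermes's actual implementation; the `send` callback stands in for the broker connection.

```python
import json
import sqlite3
from typing import Callable


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory table standing in for Rucio's message table (hypothetical schema)."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE messages (id INTEGER PRIMARY KEY, created_at TEXT, "
        "event_type TEXT, payload TEXT)"
    )
    return db


def hermes_pass(db: sqlite3.Connection, send: Callable[[dict], None], limit: int = 100) -> int:
    """One polling pass: convert pending rows to messages, send them, then delete them."""
    rows = db.execute(
        "SELECT id, created_at, event_type, payload FROM messages ORDER BY id LIMIT ?",
        (limit,),
    ).fetchall()
    for _row_id, created_at, event_type, payload in rows:
        # Each message carries the creation date, the event type, and a payload.
        send({"created_at": created_at, "event_type": event_type, "payload": json.loads(payload)})
    # Remove delivered rows so the next pass only sees new entries.
    db.executemany("DELETE FROM messages WHERE id = ?", [(r[0],) for r in rows])
    db.commit()
    return len(rows)
```

A daemon would call `hermes_pass` in a loop with a sleep between passes. Because rows are deleted only after `send` returns, a crash mid-pass can resend messages (at-least-once delivery), which downstream consumers should tolerate.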

The DBB requires that some files be ingested automatically into Butler repositories at the RSE sites once a Rucio transfer completes. We can trigger this ingestion by receiving Rucio messages, examining their contents, and sending a message to a service running at the destination DF, which then ingests the file into that site’s Butler repository. The metadata for this ingestion includes the universally unique identifier (UUID), the values of the data identifier’s dimensions, the name of the run collection that “owns” the dataset, and eventually provenance information describing how the dataset was created. This metadata will be obtained from the files themselves if they are self-describing, as raw image files are; otherwise it will come from separate JSON or YAML documents (one per file or one per batch) or from the message sent to the broker. The exact mechanism for generating this “sidecar” metadata is TBD.
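Since the sidecar format is still TBD, the following is only a sketch of how an ingest service might read a JSON sidecar that holds either one entry (per file) or a list of entries (per batch). All field names (`path`, `uuid`, `data_id`, `run`, `provenance`) are hypothetical.

```python
import json
import uuid
from dataclasses import dataclass, field


@dataclass
class IngestRecord:
    """Metadata needed to ingest one file (field names are illustrative)."""
    path: str
    dataset_id: uuid.UUID
    data_id: dict  # e.g. {"instrument": ..., "exposure": ..., "detector": ...}
    run: str  # run collection that "owns" the dataset
    provenance: dict = field(default_factory=dict)  # expected to be added later


def parse_sidecar(text: str) -> list[IngestRecord]:
    """Parse a JSON sidecar holding one entry (per-file) or a list of entries (per-batch)."""
    doc = json.loads(text)
    entries = doc if isinstance(doc, list) else [doc]
    return [
        IngestRecord(
            path=e["path"],
            dataset_id=uuid.UUID(e["uuid"]),  # raises ValueError on a malformed UUID
            data_id=dict(e["data_id"]),
            run=e["run"],
            provenance=e.get("provenance", {}),
        )
        for e in entries
    ]
```

Accepting both shapes lets the same reader handle per-file and per-batch sidecars; a YAML variant would differ only in the parsing call.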

Note that Registries do not communicate directly with each other. In particular, there is no database-to-database replication associated with Butler Registries.

Also note that some files that are part of the permanent survey record are not Butler datasets. These files are also transferred via Rucio according to policy, primarily to the FrDF.

3.2 Message Broker Topology

A message broker runs at the USDF and at each RSE site. Each remote site will maintain a connection to the USDF broker, replicating the message stream intended for that site’s Butler Ingest service. We intend to use Kafka to achieve this replication.

We’ve chosen Kafka instead of ActiveMQ to replicate messages between sites, even though this requires modifying the standard Hermes client. We’ve successfully deployed other services that use similar Kafka message replication and believe it will work well here. The modifications needed for Hermes to send messages to Kafka are straightforward.

3.3 Message Filtering

Rucio event messages contain the creation date, the event type, and a payload. We will route messages to specific RSE site brokers by selecting messages of type “transfer-done” and reading the destination RSE from the message payload. Each selected message will be written to a Kafka topic with the same name as its destination RSE, which makes the remote brokers easier to configure.
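The routing rule above reduces to a small function from a message to a topic name. In this sketch the message is a dict with `event_type` and `payload` keys, and the destination RSE is assumed to sit under the payload key `dst-rse`; these key names are assumptions and should be checked against the actual Rucio event payloads.

```python
from typing import Optional


def topic_for(message: dict) -> Optional[str]:
    """Return the Kafka topic for a Rucio event message, or None if it is not routed.

    Only "transfer-done" events are routed, and the topic name is the destination
    RSE name. The "dst-rse" payload key is an assumption of this sketch.
    """
    if message.get("event_type") != "transfer-done":
        return None
    return message.get("payload", {}).get("dst-rse")
```

Using the RSE name directly as the topic name means no lookup table is needed between Hermes and the remote brokers.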

When the standard Hermes client sends a Rucio event message to a message broker, that message can be read by only a single client. If more than one client read from this stream, messages would be split among the clients, and no client would receive every message.

We will add Kafka support to Hermes and change how and where it sends messages. Our modified Hermes client will send all messages, via ActiveMQ, to a stream intended for a logging service. It will also filter messages and publish the selected ones to RSE-specific Kafka topics. If additional information turns out to be required for ingestion, Hermes will read that information locally and add it to the messages destined for the RSE.
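The modified fan-out can be sketched as a dispatcher with two output callbacks: one for the logging stream and one for Kafka topics. In practice these would wrap an ActiveMQ (STOMP) connection and a Kafka producer; here they are plain callables so the logic can be exercised without either broker. The logging destination name `rucio-events`, the `dst-rse` payload key, and the `enrich` hook are hypothetical.

```python
from typing import Callable, Optional

Sink = Callable[[str, dict], None]  # (destination name, message)


def dispatch(message: dict, log_sink: Sink, kafka_sink: Sink,
             enrich: Optional[Callable[[dict], dict]] = None) -> None:
    """Send every message to the logging stream; route transfer-done events to an RSE topic."""
    # 1. All messages go to the logging service's stream.
    log_sink("rucio-events", message)
    # 2. Only transfer-done events that name a destination RSE go to Kafka.
    rse = message.get("payload", {}).get("dst-rse")
    if message.get("event_type") != "transfer-done" or rse is None:
        return
    # 3. Optionally attach locally available ingest metadata before publishing.
    out = enrich(message) if enrich is not None else message
    kafka_sink(rse, out)
```

Keeping enrichment as an optional hook reflects the text: it is needed only if ingestion turns out to require information that is not already in the Rucio message.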

The Kafka broker at each RSE site is configured to mirror only the topics for that site’s RSEs, so it receives only the messages that its Butler Ingest service can act on.
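The text does not name the mirroring tool; assuming Kafka MirrorMaker 2 were used, a per-site configuration that pulls only that site’s RSE topics from the USDF could be generated as below. The cluster aliases, host names, and RSE topic names are hypothetical. Note that with MirrorMaker 2’s default replication policy, mirrored topics on the remote cluster are prefixed with the source alias (for example `usdf.UKDF_RSE`), which the site’s ingest consumer would need to subscribe to.

```python
def mm2_properties(site: str, rse_topics: list[str],
                   usdf_servers: str, site_servers: str) -> str:
    """Render a MirrorMaker 2 config that mirrors only one site's RSE topics from the USDF."""
    src, dst = "usdf", site.lower()  # hypothetical cluster aliases
    lines = [
        f"clusters = {src}, {dst}",
        f"{src}.bootstrap.servers = {usdf_servers}",
        f"{dst}.bootstrap.servers = {site_servers}",
        # Replicate from the USDF into the site only; nothing flows back.
        f"{src}->{dst}.enabled = true",
        f"{dst}->{src}.enabled = false",
        # Only this site's RSE topics are mirrored (entries are interpreted as regexes).
        f"{src}->{dst}.topics = {', '.join(rse_topics)}",
    ]
    return "\n".join(lines) + "\n"
```

For example, `mm2_properties("UKDF", ["UKDF_RSE"], "usdf-kafka:9092", "ukdf-kafka:9092")` produces a configuration whose only replication flow is `usdf->ukdf`, limited to the `UKDF_RSE` topic.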

3.4 Message Reception

When Rucio successfully replicates a file to an RSE, it generates a “transfer-done” message. The message is filtered at the USDF and forwarded to the RSE site’s message broker, where the Butler Ingest client reads it.

The Butler Ingest client parses the message, determines which file to ingest, and ingests that file into the local Butler. It then waits for the next message from the broker.

3.5 Federated Message Broker Diagram

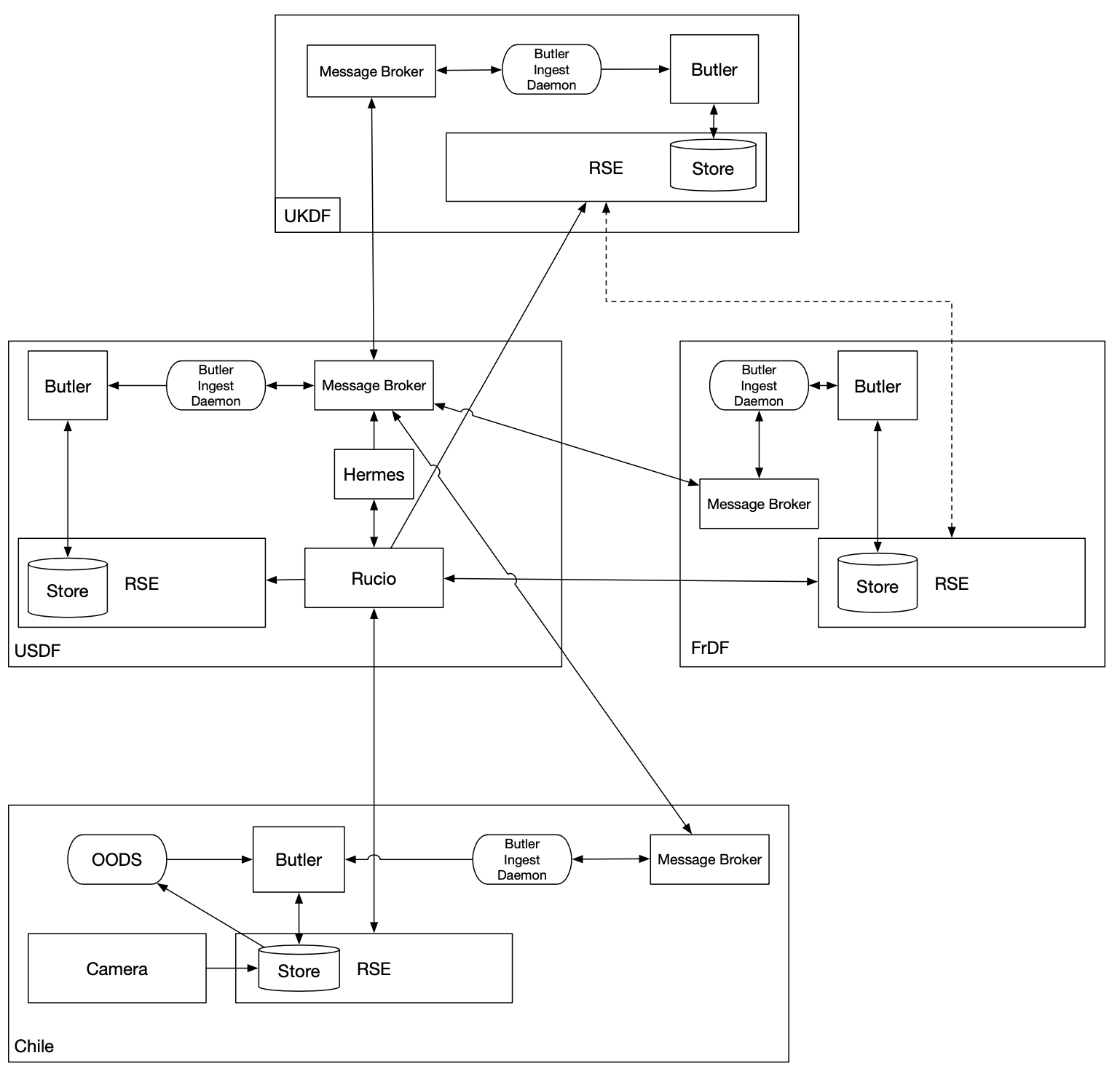

Figure 1 Federated Message Broker Diagram

This diagram shows the file transfer paths and messaging paths for DBB services. It also shows the federation of message brokers: one at each satellite DF, each connected to the primary message broker at the USDF.

All file state changes in a local RSE are reported from that site to Rucio at the USDF using the Rucio utilities or APIs. This holds in all cases. For example, when a file changes state in an RSE at the UKDF, the change must be registered directly with the USDF; it is not proxied through the FrDF, even though the UKDF obtains its files from the FrDF rather than directly from the USDF.

Each satellite site has a Butler ingest daemon that reads messages from the local broker and ingests files into the Butler at that site. The daemon should batch incoming messages so that files can be ingested in groups rather than one at a time.
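One way to batch is to flush when a batch reaches a maximum size or when its oldest message exceeds a maximum age, whichever comes first. The sketch below assumes that policy; `max_size`, `max_age_s`, and the `ingest` callback (which in a real deployment would wrap the site’s Butler ingest call) are hypothetical. Times can be passed in explicitly, which makes the behavior easy to test.

```python
import time
from typing import Callable, Optional


class IngestBatcher:
    """Group ingest messages and flush them when a batch is full or too old."""

    def __init__(self, ingest: Callable[[list[dict]], None],
                 max_size: int = 100, max_age_s: float = 30.0) -> None:
        self.ingest = ingest
        self.max_size = max_size
        self.max_age_s = max_age_s
        self._batch: list[dict] = []
        self._started: Optional[float] = None  # arrival time of the oldest queued message

    def add(self, message: dict, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        if not self._batch:
            self._started = now
        self._batch.append(message)
        if len(self._batch) >= self.max_size:
            self.flush()

    def tick(self, now: Optional[float] = None) -> None:
        """Call periodically (e.g. when a broker poll times out) to flush an aged batch."""
        now = time.monotonic() if now is None else now
        if self._batch and now - self._started >= self.max_age_s:
            self.flush()

    def flush(self) -> None:
        if self._batch:
            batch, self._batch, self._started = self._batch, [], None
            self.ingest(batch)  # one grouped ingest call per batch
```

With a Kafka consumer, one reasonable choice is to commit offsets only after `ingest` returns, so that a failed batch is re-read rather than lost.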

4 Files

Most files are expected to be stored in an object store at each location. Some locations may choose to use a filesystem instead.

The Large File Annex is currently expected to contain two types of files: files that are ingested into a Butler and used as datasets, and files that remain only as read-only objects.

5 Databases

Qserv databases are not part of the DBB. Instead, canonical Parquet files copied via the DBB are transformed, partitioned, and ingested into local Qservs.

The Alert Production Database is internal to the Alert Production and resides only at the USDF.

The Prompt Products Database (including Solar System Objects), the Transformed Engineering and Facilities Database, the Exposure Log, and any other databases within the Consolidated Database are replicated to other Data Access Centers via native database replication technology.